New look, new apps, new browser. Here's what we think of Microsoft's new OS...

I have been testing Windows 10 for two months on my desktop and laptop, and it is the best PC experience I have had since Windows XP. The Start menu is back with the best of its features, and even though it is familiar, it also feels fresh!

I like the direction Microsoft is taking with Windows 10, accepting feedback and ideas from its customers and professionals along the way. It feels like the best way to shape Windows into something people love to use rather than something they are forced to use.

Windows 10 delivers a refined, vastly improved vision for the future of computing with an operating system that's equally at home on tablets and traditional PCs.

This new operating system combines the best of old and new Windows features, making it the best OS from Microsoft to date while correcting the mistakes made in Windows 8.

What’s new? Windows 10 fixes a lot of the irritations found in Windows 8 by improving the interface for desktop computers and laptops, including the return of the Start menu, a virtual desktop system, and the ability to run Windows Store apps within a window on the desktop rather than in full-screen mode.

Windows 10 is a universal platform that runs across devices such as Windows Phones, Surface tablets, servers, data centers and games consoles.

Adjustable Start Menu: This is the best Start menu to date. It defaults to a narrow column, but users can drag its edges to resize it to their liking. Fans of "Live Tiles," those icons that quick-launch apps, may want a broader canvas.

Spoken Reminders: Hit the mic icon in the search bar, and the digital assistant Cortana will listen for spoken commands. Cortana can then feed the relevant information directly into calendar, email, reminder and calculator apps. Try saying "Remind me to buy a new watch tomorrow at 6 pm," and you'll get a sense of the possibilities.

"Hey Cortana": Really chatty users can go into Cortana's settings and flip on "Hey Cortana." The digital assistant will then wake up at that very same voice command.

Notebook: Cortana follows your search and browsing habits in an attempt to decipher your personal tastes. Cut to the chase by hitting the notebook icon in Cortana's settings and filling out your preferences directly. More privacy-minded users can also cut off Cortana's senses by hitting "Manage what Cortana knows about me in the cloud."

Refined Searches: The search bar embedded in the Start screen simultaneously searches your personal files and the web. For a tighter focus, you'll notice two buttons appear as you type a search term. One offers to search "My stuff," the other, the "Web." Select according to your needs. "My stuff" shows the files and folders on your PC.

Forget-Me-Not Files: Can't remember the name of that PowerPoint deck? Enter the file type ".ppt" in the search bar, and it will pull up every saved PowerPoint file, sortable by relevance or recency. Ditto for Word docs and Excel spreadsheets.

Everything Runs in a Window: Apps from the Windows Store now open in the same format that desktop apps do. They can be resized and moved around, and they have title bars at the top that let you maximize, minimize, and close with a click.

Snap Enhancements: You can now have four apps snapped on the same screen with a new quadrant layout.

Windows will also show other running apps and programs for additional snapping, and it even makes smart suggestions for filling available screen space with other open apps.

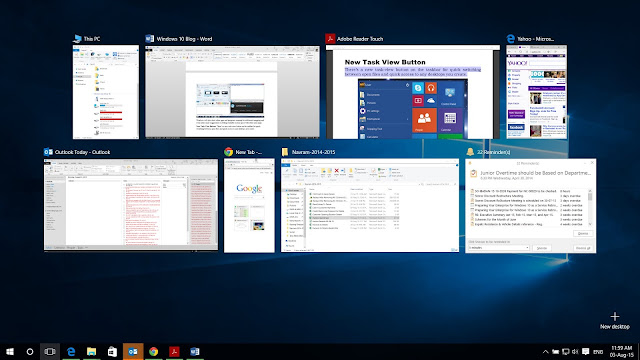

New Task View Button: There’s a new Task View button on the taskbar for quick switching between open files and quick access to any desktops you create.

Multiple Desktops: Create desktops for different purposes and projects, switch between them easily, and pick up where you left off on each one. This helps me a lot when working on multiple projects at a time.

Find Files Faster: File Explorer now displays your recent files and frequently visited folders, making it easier to find files you’ve worked on. This is a really helpful feature for professionals and students who are working on multiple projects.

Continuum: For convertible devices, such as the Surface, there are two modes: tablet and desktop. When you use the device as a tablet, Windows 10 will automatically change to tablet mode, which is more touch-friendly. This means apps will run full screen and allow you to use touch gestures to navigate.

Once you connect a mouse and keyboard, or flip your laptop around, Windows will go into desktop mode. Apps turn back into desktop windows that are easier to move around with a mouse, and you’ll see your desktop again.

Microsoft Edge Web Browser: I don’t remember when I last used Internet Explorer, thanks to the all-new Edge web browser. Codenamed “Spartan”, Microsoft Edge is Microsoft’s new web browser, replacing Internet Explorer. It is a lot more lightweight than its predecessor and allows you to annotate web pages and share them with others. It is very fast and reliable, with loads of useful features, which to my mind makes Edge the best web browser available today.

Reading List: The star icon in Microsoft Edge doesn't just add a webpage to your favorites list. You'll notice a second option to save a story to a "Reading List." The browser will then automatically save the headline, the picture and the link inside a handy side menu, which slides out of view until you're ready for some heavy-duty reading. This should help users with limited internet access a lot.

Marginalia: Microsoft Edge includes a pen and notepad icon in the upper left-hand corner. Hit it, and Edge will convert the webpage into mark-up mode. Use digital ink, highlighters and text boxes to mark up the page. Use the share icon to email or save your web clippings.

New Calendar App: The new calendar app allows you to link to your Outlook calendar and your email address, and it now runs in a window rather than full screen.

New Mail App: The new mail app has had a facelift and allows you to link in your Outlook email or your Microsoft account email address.

Photos App: The Photos app is a nice little way to organize your photos, and it works whether you are on a tablet, phone or desktop PC. It will import photos directly from your digital camera, or from the on-board camera if you are using a tablet or phone.

Now we can also perform minor corrections and enhancements, such as removing red-eye or lightening a dark photograph, and apply some simple effects such as sepia or black and white. To do this, tap or click on an image; this will open the image in view mode.

As you move your mouse or tap on the image, a toolbar will appear along the top. This gives you options to share a photograph via email or social media, or to see the image full screen. You can tap the magic wand icon to perform some automatic adjustments such as brightness and contrast. You can also tap the pencil icon to do your own editing and photo enhancements. These features were long awaited in Microsoft's default Photos app.

Verdict: Windows 10 works well, and it didn’t break any of my older Windows software. The launch is just the start. Microsoft intends to continuously upgrade it over time, and the user has no choice about this, as you can’t turn updates off without becoming unsupported. There is a Microsoft tool to hide or block unwanted driver updates, however. The great news is that you will not need any additional device drivers if you already have drivers for the previous version of Windows.
